How Linea AI Works

Linea AI uses machine learning to study historical events generated by the Cyberhaven platform and detect deviations from typical data flows.

At its core is the proprietary Large Lineage Model (LLiM), which estimates the probability of each event and flags low-probability events as anomalous. When LLiM detects a low-probability flow, it forwards the event to a large language model (LLM) that evaluates the semantics of the data movement and compares it against historical patterns to determine severity. Linea AI is deployed privately within each customer’s secure Google Cloud Platform (GCP) environment, which ensures strict data separation: each instance analyzes only that customer’s own historical events.

Example: Identifying Deviations in Data Flows

The scenarios below illustrate how Linea AI distinguishes between a normal data flow and one that deviates from expected behavior.

Normal flow

- Jane, the CFO, creates a sensitive corporate Google Sheets document named `2024_executive_equity_awards`.

- John, the Corporate Accountant, downloads the sheet as an XLS file.

- John attaches the file to an email and sends it from his corporate email account to the HR team.

In this case, LLiM assigns a high probability to the flow because it matches historical behavior: sensitive files are shared internally through sanctioned tools such as corporate email. No incident is created.
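A lineage flow like this one is a chain of steps. One simple way to illustrate why such a chain scores as likely is a first-order Markov model over step labels, shown below as a swapped-in technique rather than LLiM's actual method. The step labels (`sheet_created`, `downloaded_xls`, `emailed_internally`, `uploaded_shared_drive`) and the small path history are hypothetical, made up for illustration.

```python
from collections import Counter, defaultdict


def train_transitions(paths):
    """Count step-to-step transitions across historical lineage paths."""
    counts = defaultdict(Counter)
    for path in paths:
        for prev, nxt in zip(path, path[1:]):
            counts[prev][nxt] += 1
    return counts


def path_probability(path, counts):
    """Probability of a lineage path as a product of first-order transition
    probabilities P(next step | previous step). Unseen transitions score 0
    here; a real model would smooth them."""
    prob = 1.0
    for prev, nxt in zip(path, path[1:]):
        total = sum(counts[prev].values())
        prob *= counts[prev][nxt] / total if total else 0.0
    return prob


# Hypothetical history: the normal Jane -> John -> HR flow seen 20 times,
# plus one path where the download went to a shared drive instead.
normal_path = ("sheet_created", "downloaded_xls", "emailed_internally")
history = [normal_path] * 20 + [("sheet_created", "downloaded_xls", "uploaded_shared_drive")]
counts = train_transitions(history)
print(path_probability(normal_path, counts))
```

Because every hop of the normal path appears often in the hypothetical history, the product stays close to 1, which mirrors the "high probability, no incident" outcome described above.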

Deviated flow

- Jane, the CFO, creates a sensitive corporate Google Sheets document named `2024_executive_equity_awards`.

- John, the Corporate Accountant, downloads the sheet as an XLS file.

- John attaches the file to an email and sends it from his corporate email account to the HR team.

- John later opens the sheet, copies text from it, and pastes the data into a Telegram chat window.

Here, LLiM assigns a very low probability to the flow because sensitive data is being shared through an unsanctioned channel such as Telegram. LLiM forwards the event to the underlying LLM, which evaluates the semantics of the action and determines whether it reaches Critical or High severity. If it does and no existing policy covers this behavior, Linea AI autonomously creates an incident to alert the security team.
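The incident decision for the deviated flow can be expressed as a small predicate. This is a sketch under stated assumptions: `Policy`, `covered_by_policy`, and `should_create_incident` are hypothetical, the toy policy matches on destination alone, and `"Medium"` is an assumed lower severity level used only in the example calls.

```python
from dataclasses import dataclass

# Severity levels at which Linea AI raises an incident on its own.
INCIDENT_SEVERITIES = {"Critical", "High"}


@dataclass(frozen=True)
class Policy:
    name: str
    destination: str  # hypothetical: this toy policy matches on destination only


def covered_by_policy(destination: str, policies: list[Policy]) -> bool:
    """True if any existing policy already matches this destination."""
    return any(p.destination == destination for p in policies)


def should_create_incident(severity: str, destination: str, policies: list[Policy]) -> bool:
    """True when the severity is Critical or High and no existing policy
    already covers the behavior."""
    return severity in INCIDENT_SEVERITIES and not covered_by_policy(destination, policies)


print(should_create_incident("High", "telegram", []))
print(should_create_incident("High", "telegram", [Policy("block-telegram", "telegram")]))
print(should_create_incident("Medium", "telegram", []))
```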

Continuous learning

By continuously analyzing metadata (including file names, locations, user roles, and historical events), LLiM refines its understanding of both expected and anomalous behavior. This allows Linea AI to surface deviations promptly, even when no predefined policy covers them.
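Continuous learning can be illustrated with a model whose counts are updated as each event arrives. The sketch below is hypothetical: `OnlineFlowModel` conditions only on user role (one of the metadata fields listed above) and uses add-one smoothing, which is an assumption for illustration, not a description of how LLiM is trained.

```python
from collections import Counter


class OnlineFlowModel:
    """Hypothetical incremental model of P(destination | user_role) with
    add-one (Laplace) smoothing, updated one event at a time."""

    def __init__(self) -> None:
        self.pair_counts: Counter = Counter()  # (role, destination) -> count
        self.role_counts: Counter = Counter()  # role -> count
        self.destinations: set[str] = set()

    def observe(self, role: str, destination: str) -> None:
        """Fold one new event into the running counts."""
        self.pair_counts[(role, destination)] += 1
        self.role_counts[role] += 1
        self.destinations.add(destination)

    def probability(self, role: str, destination: str) -> float:
        """Smoothed probability that this role sends data to this destination."""
        vocab = len(self.destinations | {destination})
        return (self.pair_counts[(role, destination)] + 1) / (self.role_counts[role] + vocab)


model = OnlineFlowModel()
for _ in range(8):
    model.observe("accountant", "corporate_email")
before = model.probability("accountant", "telegram")
for _ in range(8):
    model.observe("accountant", "telegram")
after = model.probability("accountant", "telegram")
print(before, after)
```

Add-one smoothing keeps a never-seen flow from scoring exactly zero. The example also shows the flip side of learning from history: once a flow becomes routine in the data, its probability rises and it stops looking anomalous.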

Architecture

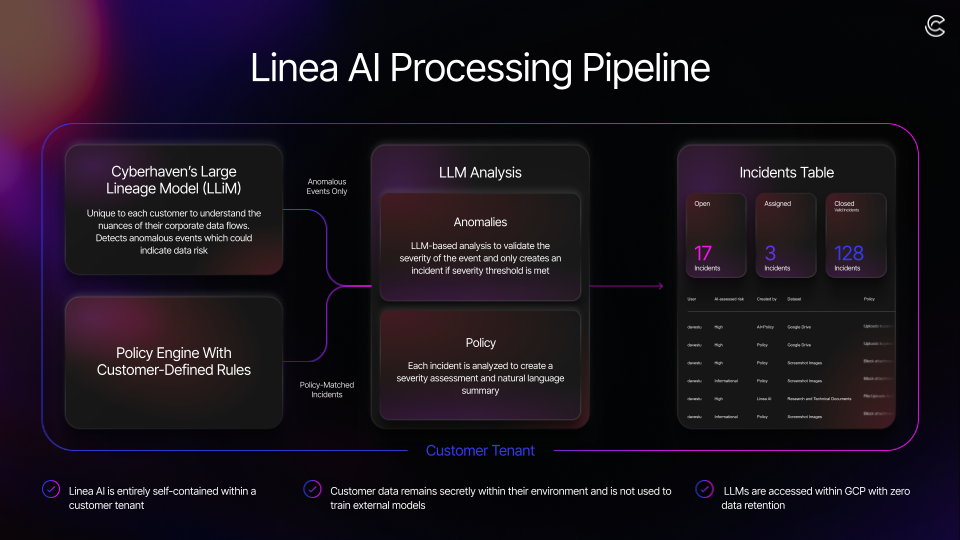

The architecture diagram below illustrates how LLiM and the LLM work together alongside the policy engine:

- LLiM runs independently and continuously, monitoring events for anomalies.

- When LLiM detects an anomalous event, it forwards the event to the LLM.

- The LLM compares the anomalous event against historical data, determines whether it meets the Critical or High severity threshold, and generates an incident with a natural-language summary when warranted.

- For incidents triggered by user-defined policies, the policy engine follows the same process, forwarding events to the LLM for risk assessment and summary generation.
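The two entry points into the shared LLM stage can be sketched as below. The names (`llm_assess`, `handle_llim_anomaly`, `handle_policy_match`, `UNSANCTIONED`) are hypothetical, `llm_assess` is a fixed-rule stub in place of a real model call, and the policy path assumes that a matched policy always yields an incident while the LLM only adds the severity and summary.

```python
from dataclasses import dataclass
from typing import Optional

INCIDENT_SEVERITIES = {"Critical", "High"}
UNSANCTIONED = {"telegram"}  # hypothetical list used only by the stub below


@dataclass(frozen=True)
class Assessment:
    severity: str
    summary: str


@dataclass(frozen=True)
class Incident:
    origin: str  # "llim" or "policy:<name>"
    severity: str
    summary: str


def llm_assess(event: dict) -> Assessment:
    """Stub for the shared LLM stage: in practice a model call that compares the
    event with history and writes a natural-language summary."""
    severity = "High" if event["destination"] in UNSANCTIONED else "Low"
    summary = f"{event['user']} moved {event['file']} to {event['destination']}."
    return Assessment(severity, summary)


def handle_llim_anomaly(event: dict) -> Optional[Incident]:
    """LLiM path: an incident is created only at Critical or High severity."""
    a = llm_assess(event)
    if a.severity in INCIDENT_SEVERITIES:
        return Incident("llim", a.severity, a.summary)
    return None


def handle_policy_match(event: dict, policy_name: str) -> Incident:
    """Policy path (assumed): the policy already triggered the incident; the LLM
    only adds the risk assessment and summary."""
    a = llm_assess(event)
    return Incident(f"policy:{policy_name}", a.severity, a.summary)


event = {"user": "John", "file": "2024_executive_equity_awards", "destination": "telegram"}
print(handle_llim_anomaly(event))
```

Routing both paths through one assessment function keeps severity ratings and summaries consistent regardless of whether LLiM or a policy raised the event.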

Linea AI operates entirely within each customer’s GCP environment. Customer data is never used to train external models or models for other customers, and the LLM observes a strict zero-data-retention policy.